An Interview...

Terrence Deacon, Ph.D. – The Co-evolution of Language and the Brain

Dr. Terrence Deacon is professor of Biological Anthropology and Linguistics at the University of California, Berkeley. His research combines human evolutionary biology and neuroscience, with the aim of investigating the evolution of human cognition. His work extends from laboratory-based cellular-molecular neurobiology to the study of semiotic processes underlying animal and human communication, especially language. He is the author of The Symbolic Species: The Co-evolution of Language and the Brain.

The following interview with Dr. Terrence Deacon was conducted at the studios of KCSM (PBS) Television in San Mateo, California on September 5, 2003. About his book, The Symbolic Species: The Co-evolution of Language and the Brain, David Pilbeam, professor of anthropology at Harvard University said: "This superb and innovative look at the evolution of language could only have been written by one person . . . Terrence Deacon. An extraordinary achievement!" Dr. Deacon is a renowned neuroscientist whose work on the evolution of language and the brain provides an important backdrop for understanding the neurological challenges involved in learning to read. Our conversation with Dr. Deacon stretches from the origins of language and consciousness to the problems of automatizing the symbolic processing necessary for reading.

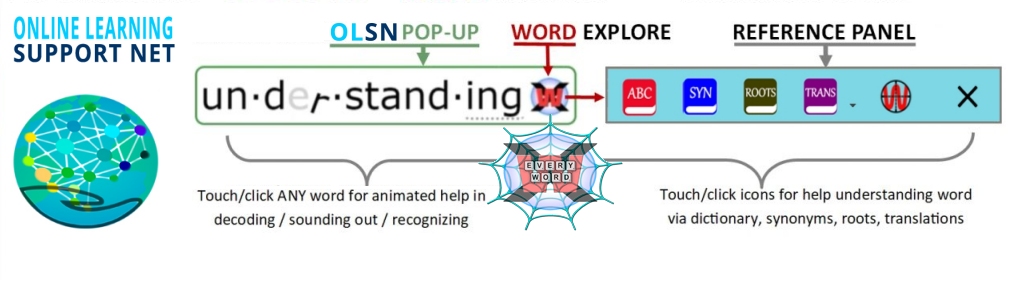

-----------------------------

David Boulton: I’d like to begin by giving you a chance to speak to the essential things that are most exciting and interesting to you. Tell us about your passion for your field and give us a sketch of your experiences.

Personal History - Study of Brains and Language:

Dr. Terrence Deacon: I began the study of language and the brain mostly because it is the most fascinating subject around, with the biggest problems. Of course, to look at its evolution is sort of the real challenge, the real golden egg one searches for. The challenge for me as a young student was that there was really no place I could go where people were actually studying brains to understand how they evolved. There were a lot of people studying how big brains were and things like that, but when I began as a young student there was not a lot of neuroscience directed at that particular enterprise. That became a challenge for me, and I did all that I could to study both the linguistic side of things, the communication side, and also the neuroanatomical side of these questions.

It turns out that, of course, the study of brains and the study of language can’t be done in one field. It really requires that you sort of dabble in many fields and become perhaps a master of none. The danger, of course, is that you get a superficial picture. But you get a superficial picture if you’re just in one area as well. I have tried throughout my career to walk that middle ground and ask the question, “What do I need to know to answer these questions?” Most of my work was in the neurosciences. I began in the early eighties by trying to adapt some of the new technologies for tracing connections in brains that I thought might be a way to go at the question.

In fact, some of my early work traced the connections in monkey brains that corresponded to the language connections in human brains. That’s what really got me on my path, partly because a surprise came out of that work. We suspected that the areas where we find language connections in human brains would be quite different in monkey brains. The surprise was that, as far as we could tell, the plan was the same plan. The way these areas were connected, even areas that we identified as language areas, or the corresponding areas in monkeys, had the same kinds of connections. It was a baffling finding and, in fact, the more new data we got about human brains, the more we found that the data we had picked up on monkey brains, and how they were organized, actually predicted the connections and the functionality of these language areas and how they were distributed.

So, a brain that didn’t do anything like human brains do in terms of communication and vocalization and so on nevertheless seemed to have the same organization. It was one of the first big turning points, I think, in my work, realizing that somehow we would have to explain how the same kind of computer (I think computer is a bad metaphor, but it’s the one we have), the same kind of device, was running a very different kind of software, so to speak. How could that be? How might the tweaks in that system have worked? To study that I went off in a number of directions: more mapping of brain areas in primates to understand their links to humans, but also much more comparative work, and also developmental work. I spent a good deal of the last decade studying how brains develop because, of course, brains don’t evolve from adult stage to adult stage. What happens is that embryology changes over the course of evolution, and that changes the resulting adult brain. That area also has been full of surprises as we pursued it in these last couple of decades.

The Self-Organizing Brain:

Dr. Terrence Deacon: Most surprising is that brains are not (it’s hard to say this) designed the way we would design any machine. They are not built the way we would build a machine. We don’t take the parts and put them together to build a whole. In fact, what happens is just the other way around. The whole starts out undifferentiated. The parts aren’t distinguished from each other, and they become more and more different from each other. The system becomes more and more complicated. The problem is that the process is very indirect.

It is not like building something from a plan. It’s a very indirect process. It became very clear that this process is very much what we would call today self-organization. A lot of the information that goes into building brains is not actually there in the genes. It’s sort of cooked up or whipped up on the fly as brains develop. So, if one is to explain how a very complicated organ like the brain actually evolved and changed its function to be able to do something like language, one has to understand it through this very complicated prism of self-organization and a kind of mini-evolution process that goes on as brains develop, in which cells essentially compete with each other for nutrients. Some of them persist and some of them don’t. Some lineages go on to produce vast structures in the brain. Other lineages get eliminated as we develop, in some ways just like a selection process in evolution.

Like evolution, it’s a way of creating information on the fly – a sort of sampling of the world around you, in this case the body as well as the external world, and adjusting yourself to it. In some ways the brain has that character, too. The complication of all of this has made me realize that the problem of language is not going to be ultimately solved by a kind of engineering model, by a kind of reverse engineering. We are going to have to think, in a sense, the way biology thinks, the way embryology works, in this kind of self-organizing, evolution-like logic to get back at what actually causes things to occur and develop in complex ways.

I think this is the case with language itself. As it’s passed from generation to generation it has a kind of self-organizing and evolution-like character to it. That is also part of where the structure comes from, where it picks it up on the fly, so to speak. We have to work that into our stories. That is really the impetus for the book I wrote. The Symbolic Species captures the essence of that idea in its subtitle, The Co-evolution of Language and the Brain. Ultimately, my argument was that language itself was part of the process that was responsible for the evolution of the brain. I mean that in the following sense.

Imagine the evolution of beavers. Beavers are aquatic animals today but they are aquatic because of what beavers in the past have done. That is, beavers have created their own world to some extent. They’ve created an aquatic world by building dams and blocking up streams and turning them into small lakes. Beavers’ bodies have evolved in adaptation to the world that beavers created. It’s a kind of complex ratcheting effect in which what you do changes the environment that produces the selection on your body.

I think language is, in a sense, our beaver dam. Language has changed the environments in which brains have evolved. That changes the picture radically because now one can look at the brain, so to speak, with an inside-out perspective on the problem and ask the question, “What’s different about human brains, and how might that difference tell us something about the forces that shaped them?” If those forces include language, then the brain itself is a wonderful signature, a wonderful trace of the forces that helped it evolve in this complicated interaction. The title, The Symbolic Species, captures this notion that we are a species that in part has been shaped by symbols, in part shaped by what we do. Therefore, our brain is going to be very different in some regards from other species’ brains, in ways that are uniquely human.

A Different Kind of Brain:

David Boulton: There are so many things to talk about. I’m both interested in the general work that you’re doing and how I can relate that to the story that we are telling. You mentioned that you can see structures in the monkey brain that predict or that correspond to what you see in the human brain. Obviously, the human brain is doing complex things the monkey brain is not doing. Does that suggest some other more extended, comparatively more virtual overlay, that’s involved in the difference rather than a genes-driven neuro-anatomical organization?

Dr. Terrence Deacon: The change in the human brain has to be a physical change. That is, the reason that we do what we do and other species don’t with respect to language is clearly something about human brains. In this regard I think there can be no disagreement that there’s something built into us, something innate, that makes us language ready. I think there is no question about that.

The real question is what kind of thing? What would we have to do, or what did evolution ultimately do to make this brain language ready? There are all kinds of different ways you could look at that question.

One is that it built it all in. If I were an engineer I would find all the parts that are necessary to make language and stick them in there, stick in these new parts. The fact that we’ve found no new parts, per se, suggests that that’s probably not the right way to go about the question. But there’s also not a clear sense in which you could look at it as something like a software addition, as if we took the same old computer and put in new software, because in fact the computer, so to speak, is different. It’s a really different kind of brain.

So for me, taking the inside-out perspective and looking at brains for clues to language rather than the other way around, I ask the question this way. Language is probably the most significant departure from any kind of behavior that we’ve seen in any other species, and it’s radically different in many ways from what other species can do. Such a large-scale difference, probably the biggest difference between us and other species, ought to be reflected in some of the biggest differences between human brains and non-human brains.

With that as a kind of guiding hypothesis (not even a hypothesis, a sort of heuristic as to what to look for), I ask the question: What is different about human brains? If one could categorize it in a systematic way, what is really different about human brains? Wouldn’t that tell us something about language? That’s really been the guiding strategy, to some extent, for what I have done over the years. What that amounts to is that we first have to look at the big things, the obvious things. I say big in a sort of punning sense, because what’s really different about human brains is, of course, that they’re bigger. We have always assumed that that’s just some sort of general story about intelligence. The truth is we don’t know much more now about intelligence and its relationship to whole brains than we did a century ago. We don’t really know how whole brains work yet. We don’t have a general theory that everybody agrees upon, or is even close to agreeing upon.

Brain Size Differentiation:

Dr. Terrence Deacon: On the other hand, this major change, if it’s not just blowing the thing up larger and adding a whole lot more to everything, if it is somehow distributed in an interesting way, that distribution of how the same parts got changed and enlarged with respect to each other might tell us something about what that difference is. In fact, it tells me something very important and that is when you change the size of something you also change the relationships between the parts. We know that a small business, for example, can have one kind of organization for doing things whereas a large business, ultimately just simply because of its size, has to have all kinds of middle managers and different kinds of bureaucracy and people don’t get to talk to everybody. The brain is a different kind of brain because it’s bigger. The connections are going to be different because it’s bigger. That is going to be a clue to it as well.

I began to look at quantitative issues and with a developmental perspective, how changes in size might change the circuits, that is, how circuits might respond to this. I think for me that has been the biggest source of insights, the biggest source of clues. They’re not answers, they’re clues. That is, I think that the connections are different because the brain is bigger and because not all parts expanded at the same rate. I think that’s the first inside out clue to what is important about this language difference. What was the change in the hardware that supported this new kind of communication and cognition?

David Boulton: So, would you say that there was a kind of morphic resonance between the evolution of language and the structures in it and the evolution of the structures in the brain – that there is this kind of resonant co-evolution in the process?

Co-Evolutionary Processes:

Dr. Terrence Deacon: I think clearly there was a co-evolutionary process in which language affected brains. The issue is that language changes at a much faster rate than brains. Clearly the language we have today and the kinds of languages that are all over the world were not always the way they are. We undoubtedly passed through not just one stage of what you might call a proto-language but probably many proto-languages, many forms of this linguistic symbolic communication system, over the course of our evolution, all of them leaving some trace.

David Boulton: In here, but not out there.

Dr. Terrence Deacon: Yes, inside but not externally. That’s one of the real problems, of course, in studying this. The only trace is in brains that are alive today. We don’t see a trace in the external world. The traces of human evolution we do see in the archeological record are not necessarily useful, directly corresponding maps of what went on inside humans. As we know, across the world, people with equal intelligence and equally complex language can be living in radically different cultures with radically different kinds of technologies. Some can look like the Stone Age of a million years ago; others can look as modern as we are today sitting in this studio. The same brains can be producing all of those systems, in part because it is not all inside the head.

Old Logic of the Brain:

Dr. Terrence Deacon: I want to say one other thing about this correspondence between language and the brain. An engineer might look for something that maps to verbs, maps to nouns, maps to the past tense, all of the various features that linguists tell us that language is broken up into. But language, of course, is broken up according to a logic that has to do with communication, has to do with symbols, has to do with the constraints we have on interacting, perhaps, with speech sounds, perhaps with gesture. The logic of the brain is a very old logic and a very conserved logic. It’s the logic of embryology. It’s the logic of self organization. In fact, it’s the logic that has been shared with a common ancestor that goes back well before vertebrates.

We can find hints as to the organization of the genes that develop brains in fly brains. That logic has probably not changed much. That logic is the logic of the organization of brains. There’s unlikely to be a nice, neat direct map between what we see in the external world of language and what we see inside brains. In fact, the map may be very, very confused and very, very different inside the brain, that is, how the brain does what we see externally in language.

David Boulton: So, the brain is creating out into the world what’s becoming the environment that it is adapting to and language is one major field that it’s creating in.

Dr. Terrence Deacon: Yes. So, in one sense, we should expect the brain to reflect features of language, the features that it’s created. For example, in the metaphor that I used about brains and beaver dams: beaver bodies don’t necessarily reflect beaver dams, they reflect the environment that was created and the demands of that environment. Those demands are not necessarily directly represented in the environment. Beavers are aquatic. Beavers have all kinds of special adaptations for living in water. It’s not easy to predict from what’s produced in the world what’s going to happen inside, so to speak, in the body. I think we can say the same about this troubling and complex dynamic in which language evolution and brain evolution are working in tandem interfering with each other, confusing each other, shaping each other. That dynamic is probably one that is not going to be easy to predict.

On the other hand, there are a few really general things that we can probably say are consistent. We can say about beavers that whatever their adaptations are going to be, they’re going to be aquatic adaptations. There are some general things about living in the water that you need to know: swimming, breathing, communicating, and so on. Similarly, one can say that there are going to be some general things that have to go along with linguistic processing. The symbol processing problem; the automatization (that is, the speeding up of the automatic running of syntax and of analysis); the mnemonic problems, the short-term memory problems associated with it – these are things that are going to be generally there. And of course there are, typically, the constraints of producing and hearing sound, or of producing it visually and manually and interpreting it visually. All of those are things that we could predict, so in one sense we can use general characters of language to make predictions. It’s probably not going to be very successful if we try to use specific characters of language to make our predictions about brains.

David Boulton: Can you see the physical trace of cognitive boundaries in the brain?

Dr. Terrence Deacon: The issue again is that the logic of how brains get built and the logic of how languages get built are different logics.

David Boulton: Right.

Dr. Terrence Deacon: The logic of brains is this embryology logic that’s very old, very conserved. The logic of language is something that is brand new in the world of evolutionary biology. It happened in one species recently, at least in evolutionary terms. In that regard, it will be a superficial trace, a tweak, so to speak, on this general pattern. That means that a lot of the details one would expect to find from language are not going to be mapped in any simple, obvious way onto the brain. Therefore, the kind of cognitive distinctions one might expect in language are probably not going to have easy, simple correspondence in functional and structural changes in the brain.

David Boulton: But there is a relationship. I mean, language is stretching our cognitive boundaries.

Dr. Terrence Deacon: Language clearly forces us to do something that for other species is unnatural, and it is that unnaturalness that’s probably the key story. One might want to ask: What is so different about language? What are the aspects of language that are so different from what other species do? And, having answered that, what should that imply about how brains have adapted to deal with it?

Single Most Distinguishing Attribute:

David Boulton: So, from a human evolution point of view you would agree with those that basically say that the development of language is the single most distinguishing attribute of human beings today?

Dr. Terrence Deacon: I do think that the development of language is the single most distinguishing attribute. Now that doesn’t mean speech, necessarily. It means the support system that’s around language – the symbolic system. Of course our communication is much more than linguistic. Some of it is very old also. But a lot of culture is also linguistic-like in a variety of ways and clearly that has been around a long time.

The ritual, the mythology… simply ways of doing things that are organized conventionally, symbolically – this is a hallmark of our species. We have, in a sense, transformed and even reinterpreted much of our biology through this system. So much of what we do, whether it’s marriage, warfare, or whatever, has been transformed by this tool that has, in a sense, taken over and biased all of our interactions with the world.

David Boulton: I have long wondered what it must have been like to not be verbally self-reflexive, to not be aware of myself in this mirror of words, this self-talk story that’s going on. And yet it certainly seems that at some point human beings weren’t doing that at the level that we do it now, and at some other point they were. That is a hugely significant threshold, regardless of where you place it in the evolutionary sequence.

Early Mammal Brain:

Dr. Terrence Deacon: Yes. I think that there are probably many thresholds that we are talking about and early language-like behavior might look very different. I think clearly early language-like behavior had to involve much less vocalization because the brains that preceded us, mammal brains, are not well suited to organizing sound in precise, discrete and rapidly produced learned sequences.

This is something that mammal brains, in effect, are poorly designed to do precisely because the system we use to produce sound is a system that normally should be running on autopilot so that we can breathe appropriately, so that we don’t choke, and so on and so forth. There’s literally been a change in that circuitry to override those systems in order for language to be possible.

Language in the past, or what corresponds to language homologically, as they say in biology (the homologues to language), might have looked very different. Not a signed language, necessarily, but some very complicated combination of modalities. This makes it very hard to predict what the effects might have been and how those effects were layered, layer upon layer, to produce new kinds of languages, new kinds of brains.

Episodic and Procedural Memory:

Dr. Terrence Deacon: For me, one of the things I think is really exciting about language is this aspect of how it reflexively changes the way we think. I think that’s one of the most amazing things about being a human being.

When people talk about memory they usually talk about two kinds of memory. Episodic memory, remembering a particular thing that happened, a sort of one off event in your past; and then something called procedural memory, the kind of memory that’s involved in skill learning.

The problem with one-off memory is that there are so many one-off events; how do you find them again in your memory? The problem with procedural memory is that you’ve got to be in the same situation. I know how to ride a bike when you put me on a bike, but if you ask me to tell you exactly which leg I put on first, how I start out, how I stay up, I couldn’t tell you, because it’s, in a sense, embedded in its context.

Language is unique in the following sense: that it uses a procedural memory system. Most of what I say is a skill. Most of my production of the sounds, the processing of the syntax of it, the construction of the sentence, is a skill that I don’t even have to think of. It’s like riding a bicycle. I don’t even have a clue of how I do it.

On the other hand, I can use this procedural memory system because of the symbols that it contains, the meanings and the web of meanings that it has access to, that are also relatively automatic, to access this huge history of my episodes of life so that in one sense it’s using one kind of memory to organize the other kind of memory in a way that other species won’t have access to without this.

The result is we can construct narratives in which we link together these millions and millions of episodes in our life in which you can ask me what happened last month on a particular day and if I can think through the days of the week and the things I was doing when, I can slowly zero in on exactly what that episodic memory is and maybe even relive it in some sense.

Verbal, Self-Reflexive, Volitional Memory Recall:

David Boulton: This is a very powerful point. You mentioned before the comparative unnaturalness of this. At one level we could say that, prior to this level of self-reflexive, volitional memory recall, there was a more organismic memory that was less about ‘me’ remembering and more about re-member-ing me, reassembling me relevantly to the situation that I’m in, in nature. So we’re talking about a verbal, self-reflexive, volitional memory access and control system that little children are picking up when they are two, three and four years old. How well they pick up this complex skill, which is, in the long view of evolution, radically unnatural to their brain, is almost fate-determining.

Dr. Terrence Deacon: Clearly, it changes everything about what being a human being is. Again, I use the phrase, the symbolic species, quite literally, to argue that symbols have literally changed the kind of biological organism we are. Not just in evolution but in day-to-day life. I think we operate fundamentally differently at a cognitive level because of this.

Yet, with just a few tweaks we’re essentially African apes. We’re just a few tweaks away from an African ape. Just enough. Herbert Simon coined the term satisficing. It’s not optimizing. It’s just enough to be able to do it. I look at us as an African ape that’s been tweaked just enough to be able to do this radically unnatural kind of activity: language.

How Old Language Is:

Dr. Terrence Deacon: Now, it turns out that if satisficing has been going on for a long time in our evolution, then it’s not so unnatural after all, because, of course, brains will have adapted to those demands. This sets up an interesting problem, and it speaks to the problem of how old language is.

If language is new, if language is only a hundred thousand years old, or even less, a fifty- or sixty-thousand-year-old kind of process, then we should expect that it has had little effect on human brains – that whatever tweaks were used were, in a sense, clumsy kluges to make the thing work. We shouldn’t expect that it’s easy, that it’s fluid and runs without difficulty.

On the other hand, if language has been around for a good deal of our evolutionary past, say a few million years, or even a million years, that’s adequate time for it to have structured and reshaped the brain to be better satisficed to the problem of processing and using language in real time.

Similarly, language will have adapted. We will have adapted this language process to be better fit to our own constraints as we go along. The two will, in a sense, be in tandem, converging towards each other.

The question one has to ask from the present time looking back is: Are we well adapted to this? Are we really a symbolic species or is this just a kluge added on top of a primate brain not well designed for this? I think the evidence for the age of this probably can be best picked out by comparing to something quite relevant to what we are discussing, and that is writing and reading.

Writing and Reading:

Dr. Terrence Deacon: Writing and reading occurred recently. Therefore, whatever we use to do this was a kluge, that is, an engineering patchwork that just sort of barely gets by. We are not well designed to do so and as a result a lot of people have difficulty acquiring reading and writing. There are many kinds of difficulty and many people will never be able to read or write because their brain hasn’t had a chance, so to speak, to keep up with this process.

If language itself were like that we should expect to find those kinds of problems with our ability to acquire language. In fact, I think the surprising feature is that not only is language incredibly complex, it is acquired rapidly in our development, even when children are quite young. Reading, of course, takes quite a while to acquire; you have to wait until your brain has matured a ways.

But even more interestingly, people with significant brain damage at birth nevertheless do better at language than any other species on the earth, under intense training. That suggests to us that the system has even been over designed a little bit to be prepared with a kind of redundant system in case things go wrong.

Young children with the whole left hemisphere damaged or removed at a very early age can nevertheless acquire language with half a brain, so to speak. The wrong half, for that matter. That again suggests the system is somehow over designed. That can only occur if there’s been a long evolutionary history, I think, in which language has played a significant role, suggesting to me that at least, language-like processes are not new, are not recent.

David Boulton: So, you are making a distinction between the comparative unnaturalness of language becoming second nature to us over a vast period of time and the clearly recent invention of writing that we see a few thousand years ago.

At some point in our language development we evolved speech, which over time folded back on itself to become this self-reflexive awareness system. Now that seems to be the big threshold. When did we begin speaking? Any idea, in your own view, when that happened? Isn’t that comparatively recent, within the past hundred thousand years? Doesn’t the fossil record indicate that the anatomy of our jaws and throat wouldn’t allow for speech until relatively recently?

Vocalization and the Larynx:

Dr. Terrence Deacon: The issue of vocalization, I think, is an important one because, when we look at the brains and the vocal tracts of non-human mammals, they are quite different from ours. For me, the vocal tract itself is a secondary feature, though it plays a role. It plays a role in the kinds of sounds that we can produce: the diversity in the range of phonation that we produce, mostly the range of vowels. Even more troublesome is the anatomy of the control of the larynx.

One of the things that I and a colleague, a graduate student of mine, Alan Sokoloff, pursued over a decade ago was trying to understand the connections between the forebrain and the structures of the larynx and the tongue that are crucial to language. It turns out that very likely our ancestors, the australopithecines and of course those before them, had, like other mammals, a relatively disconnected control of the larynx and, to some extent, even of the tongue. By that I mean that there was probably not much voluntary control over vocalization, and certainly not at the level at which you could stop and start it on a dime, so to speak, with very little effort.

That changed over the course of evolution, and I think we have some clue to that, because there’s good reason to suspect that the change in the size of the brain had something to do with the change in control of that system. The secondary signal for that, which we actually can see in the fossil record, is probably the change in the shape of the base of the cranium and what it tells us about the position of the larynx. The larynx’s role in producing sound, of course, wouldn’t be selectively favored if there wasn’t already a need to produce sound in an articulate way. In other words, the production of vocal speech has to, in a sense, precede, drive, and co-evolve with the external sound-production anatomy.

By the time of Homo erectus and early Homo sapiens, which, as we now know, precede anatomically modern humans, we already see some of these changes occurring. That suggests that vocalization was already being used in a way probably different from that of other species.

In this regard, speech, that is the transfer of this communicative task to sound, was probably a slowly evolving, gradually improving feature. Now why should speech take over from the arms and hands? Well, obviously your arms and hands could be used for other things and it would be nice to free them up. On the other hand, it’s also in some ways easier to mimic the sounds.

One of the problems in learning language is that we have to learn the sounds of language. To learn a manual display, one has to take in the display that we see and then do a mirror-image reversal, because for me to do it I have to take your perspective. That’s an extra cognitive step that makes true mimicry of behavior a little difficult. However, many species, especially bird species, mimic sound. Because what we hear when someone else produces a sound is not all that different from what we hear when we produce it ourselves, we don’t have to go through that extra step.

That is probably one of the reasons, among others, why speech took over this function more and more over time. I don’t think it’s a case of it suddenly taking over; I think the evidence we have suggests that it probably took over gradually. As speech becomes more and more of an issue, there may well be different demands on processing time, short-term memory, and so on, that go along with it. As we begin to change this system, the whole system has to change around it. Again, all of this makes the study of the evolution of language somewhat more difficult than telling a just-so story about when it might have occurred.

Reading Overlays Speech:

David Boulton: The production of speech, the way that we produce speech, the degree of coordination between all these different systems that, as you said before, were at one point running on automatic and became conscripted to the communication system in order to articulate and process communications through sound, didn’t this change the shape, time, frequency, packet sizes, whatever you want to call it, of the information exchange that’s going on?

Also, wouldn’t you think that speech had the benefit that you didn’t have to see it?

Dr. Terrence Deacon: Yes.

David Boulton: You could be on opposite sides of a tree. You didn’t have to look at each other and be able to see each other’s gestures to communicate, so you could coordinate at a greater distance. But relative to where our story ultimately goes, one of the things that we’re talking about, of course, is that reading, at least initially, when children are developing the skill, has to overlay speech. So, it has to work inside the constraints of the existing language processing infrastructure. It has to take this code and simulate the assembly of the speech processes that generate virtually heard or actually spoken word sounds. The timing of this construction has to fit inside the timing and structural constraints we evolved to process speech. What have you discovered in the past decade, when you were doing this kind of research, or since, that casts any light on the timing coordination of the different component parts involved in this dance called speech?

How Writing Systems Evolved:

Dr. Terrence Deacon: Timing is not something that I have done direct work on. However, something relevant to it is that I think we can learn quite a bit about the neurological problem by looking at how writing systems themselves have evolved. In a social sense, writing has evolved in response to changes that stuck or didn’t stick in these various systems. The few times that language has turned into writing, become externalized in the world, it almost always began with a kind of pictorial mode. The problem with pictures is that, of course, they’re not like sound, not like language. The advantage of pictures is that they’re much closer to what they represent, in some sense. We can even look at some of these ancient writing systems and make some educated guesses, and it turns out that usually they’re wrong, for interesting reasons. One of those reasons is that almost as soon as picture-like writing came about, people began to use the pictures to also represent sounds, the names of things: a rebus, like the ones you find on a Coke bottle cap, in which you’re trying to figure out the meaning of something by using a picture to represent a sound.

Most of the pictorial writing systems very quickly took on sound iconism, that is, sound likeness, as well. The word for that thing has a sound, and I can put that sound element together with other sound elements. What happened was that this kind of system got co-opted very quickly. Now in one sense you can say, well, this is a disadvantage, because the meaning might be easier to interpret if you could see the picture, if you got something about the picture of it right away, because vision is one of the ways we remember objects and relationships. On the other hand, it’s broken up into bits and pieces; it’s separated. And of course it’s quite distant from speech. Speech has recoded this in a whole different way. What seems to have happened in most of the world’s writing systems, not all of them, but even those that still have a bit of pictorial nature to them, is that they have acquired this feature: they have become representations of the speech stream itself, and only secondarily of something in the world.

What that tells us is that these systems have become adapted to us, that is, to our constraints, to the things that we do well automatically. Even so, they are not as flexible and facile as language, which has this kind of evolutionarily instantiated support system that makes it relatively effortless. So, something that is more encoded than a picture is actually easier for communication in this regard. It’s still a kluge, still an external invention for which brains weren’t well designed. I think the evolution of writing systems is a hint to what the brain’s design is. They’re not perfect; they’re also satisficing solutions. There are also other factors influencing them, but they are evolving towards a better fit to what we do automatically and easily.

David Boulton: In your book you make an interesting correlate to this point and that is that the evolution of language is more reflective of the child’s ability to learn it rather than the adult’s ability to use it. This is something that creates quite a contrast because I think that’s not the case with writing systems. Writing systems are an invention of adults for adults. There hasn’t been the same degree of evolutionary loop concerning itself with how well it fits the child.

Acquiring Language:

Dr. Terrence Deacon: Yes. I think one of the really interesting things about writing is how long it takes before we’re mature enough, before our brain is ready to acquire it and to do it easily because the learning process there is clumsy. With respect to speech or even sign languages, the learning process is already set up. From a very early age, in fact, we’re able to do things that, if one was just to look at the complexity of the task, would seem infinitely more complex than interpreting these letters and figuring out how letters map to sounds. I think that that’s a real paradox that makes the reading and writing problem stand out.

There’s another feature to this, in which writing has adapted to some of the constraints of adults using it, of course, whereas speech and signing have probably adapted more to children acquiring them. The reason I say that is that the only languages that can be passed down effectively and efficiently are those that can be picked up quickly and easily at the youngest possible age.

Why at the youngest possible age? Because if you have to use this for everything you do, to some extent you want to acquire it in a way that’s most suited to your brain, in which most of your resources have been organized with respect to it. Early on brains are quite plastic, quite flexible. The result is the more experience with language we have very early, the better we are at it as adults. Those who have been deprived in one way or another of that experience are quite poor in their linguistic abilities as adults, some never acquiring some of the features of language.

So, to some extent, the very specialization that we have for acquiring language, and acquiring it at an early age, amplifies this difference in human brains. Brains come into the world, so to speak, expecting to be bombarded with language at an early age as part of their organizing principle, and human brains that don’t get that experience are, in effect, deprived of the normal nutritive experience of the environment that their evolution anticipates they are about to fall into.

David Boulton: Back to our story and another place that we can plug in here. Building on a point from a moment ago, we talked about language adapting itself according to how learnable it is to young children because that’s what’s selecting it, and so they’ve grown together – that’s where the co-evolutionary linkage is the most powerful between humans and language.

Dr. Terrence Deacon: Right.

David Boulton: But writing systems are adult inventions that are on the other side of this language learning barrier, on the other side of this abstract self-reflexiveness, and it requires different kinds of processing than is natural to the child or to spoken language acquisition.

One of the things that’s most amazing to me, and that we want to bring to light about our writing system, is the degree of disambiguation and assembly that has to happen to convert these letters into sounds. I don’t know if you’ve ever looked at this, but any one letter has a field, almost like a quantum field, of potential sounds that collapses in the context of a series of letters that must be buffered in memory, and that can extend many seconds into the future. In other words, what determines the sound of a particular letter in one word may be a particular letter in a word many words downstream, as the eye/mind registers it. And yet this whole dance of assembly, disambiguation, and clarification has to happen fast enough that the whole thing emerges in us as though we’re talking to ourselves.

Dr. Terrence Deacon: Right.

David Boulton: So, the brain has evolved to process language in ways that the writing system has to artificially simulate and co-opt. I know you said before that timing wasn’t a particular area you played around with, but in your exploration of writing systems, pictographic and others, have you ever encountered any comparisons between different modalities of writing and their effects upon, or requirements of, the brain?

Writing Codes & Computer Operating Systems:

Dr. Terrence Deacon: The analogy that comes to mind is the various kinds of computer operating systems that have appeared over the past few decades. The computer is something we oftentimes use as a model for language, and it is typically the model that many linguists take home. When you’re trying to come up with a rule system to describe a natural language, it turns out to be enormously complicated: lots of tweaks and special cases, and rules so arcane that no one really knows them consciously, rules that one has to discover in the study of language.

As a result, I think many people have looked at language as though it’s this kind of engineered, clumsy interface that we have to acquire. If you look at it like a computer interface written by engineers that has to be used by non-engineers, it looks as though it’s this impossible thing to learn – that it takes a whole lot of work to do it. The result is, it looks as though you have to have special training in advance to do it. Before you get there you have to have gone through the class, you have to have read the manual, and then you can figure it out, or you have to have the crib sheet in your brain to begin with.

On the other hand, when we looked at the evolution of computer interfaces, we found very quickly that people realized the interface had to change to be better suited to its users, and very quickly we saw something just the opposite of the literacy problem: text-oriented interfaces were rapidly replaced by object-oriented, iconic interfaces. In some ways, this let us work with the computer as we work with things in the world, treating the screen and its contents as though they were physical objects we could move around, which was much more natural.

There’s something radically unnatural, ultimately, in reading off the computer screen, decoding what it meant, and figuring out how to use other words to instruct the computer to accomplish what you wanted, because, in fact, it required all these extra encoding and decoding steps. We made computer interfaces more natural, and they’re progressively getting more and more natural, so that little kids, in effect, can pick them up quite easily; whereas twenty years ago we couldn’t have expected any child to pick up an operating system that was run by typing a word after a caret prompt.

The interesting feature of that is that, to some extent, writing is still like that early computer interface. It requires these complicated learning processes in which we learn the sounds first, then we learn the words. We eventually learn to make these complicated decodings, and we probably automate a lot of it, so we don’t even see the letters much anymore as we read. This is why, when I look at my own writing, I oftentimes don’t catch my own typos. I’ve automatically begun to see things as whole words or even whole phrases. I look past the typos because the words are so easy for me.

Now the problem with that, of course, is that for those who haven’t gone through that process, who haven’t read the manual and haven’t memorized the operating system to begin with, it’s an enormous task just to get started, just to get to square one, to be able to use the machine, in this case reading and writing. So, I think what hasn’t happened is that the system hasn’t evolved to the level where it becomes natural and spontaneous in the way that, for example, computer interfaces have. Will we ever come up with a writing system that does that? Maybe not, because, in fact, representing sound is not a trivial matter. We don’t represent sound in the icons on a computer screen precisely for that reason: it’s so complicated that it takes a lot of decoding.

David Boulton: I’m often amazed when computer engineers try to dissect some aspect of human behavior as if it could be analyzed in engineering terms. It seems so absurd on so many levels, language being one of them. Think of a center fielder who hears the crack of the bat, runs to some place deep in center field, and catches the ball over his head without looking back. There’s no machine in the world that could figure out how to do that, or coordinate everything that would be necessary, from that sound, that acoustical signature. So, there are a lot of things that human beings do that defy all our sense of being able to break them down into some kind of engineer’s logic of how they might be reproduced.

The Brain's Logic:

Dr. Terrence Deacon: Exactly. One of the things I want to say about that is that I think it’s interesting that in the history of recent linguistics we have modeled language processing on a Turing machine. The original generative grammar that Noam Chomsky pioneered, which made a radical advance in the field, is modeled on the way computers work, that is, a simple set of instructions and rules to generate something. Almost certainly that’s radically different from the way our brains work. The question is, can we use that descriptive tool, that engineering model, to go back and try to figure out how brains are doing this? Or does that engineering model actually get in the way of figuring out what the brain’s logic is, as opposed to Turing machine logic?

David Boulton: That depends on whether it’s an exploratory, observational lens or whether we’re taking it as the frame through which we see reality. It’s how we approach using the model. Back to the writing systems: what’s so amazing to me, and what I’m trying to get at in the series, is the neurological challenge, because it’s so precarious in its timing.

Dr. Terrence Deacon: Right.

David Boulton: There’s a mounting complexity to the ambiguity involved. As you said about reading your own writing, I have the same experience: I’m reading along in something I’ve written, and my mind is priming as I see a letter, and I’m guessing the rest of it. It’s revealing certain strategies about how we construct things.

Dr. Terrence Deacon: Right.

David Boulton: It’s available to all of us who look. If those processing strategies form badly early on, it creates havoc, because it’s very difficult to get back to them and reform them.

Automatization:

Dr. Terrence Deacon: I think the crucial problem with all of language as we use it today is the problem with automatization. How do we take something that has so many variables, so many possible connections and combinatorial options, and do it without having to think about it? How do we turn this complicated set of relationships into a skill, ultimately, that can be run, in effect, as though it was a computation?

What we’re really doing is we’re taking something that is a nightmare for computation and as we mature both as language users but also as readers and writers, we have to automatize it so we don’t have to think about those details. The problem with automatization is that at any step, if you’ve got a slowdown step, if any piece of that enterprise has a block, where you can’t hold enough of the information, the whole house of cards falls apart. You can’t build beyond that point.

It looks as though, with the acquisition of language itself, speech particularly, we’ve got a lot of redundancy in the system. We have workarounds that are there, probably evolved there, because it was so important and because there was enough evolutionary time behind that process to build in that safety net. With respect to reading and writing, there was no evolutionary support, and so there is no safety net. There are probably very few successful redundant workarounds that don’t take longer or aren’t so clumsy that they drop something, lose track of it, or are simply too slow to keep up with your memory processes.

David Boulton: Right. We stutter.

Dr. Terrence Deacon: Right.

David Boulton: We stutter and when the stutter happens during this assembly, you get this start-stop hesitation that after a while creates this frustration and reading aversion and all that can go with it.

Dr. Terrence Deacon: Right.

Language as a Collective Phenomenon:

David Boulton: This is analogous to embryology in the sense that what we’re talking about here is an interactive, back-and-forth process. At another level, it’s almost like there’s this parabolic inversion from ‘whole to one and one to whole’ in the way that these two systems work.

Dr. Terrence Deacon: Yes. That’s right.

David Boulton: Language itself is a property of a collective, not an individual.

Dr. Terrence Deacon: Right.

David Boulton: I’m not so sure that ‘affects’ aren’t too, at another level of conversation.

Dr. Terrence Deacon: I think you’re right.

David Boulton: These are properties, not of an individual organism that you can pull out of the context of a group or family or tribe or society or culture or however you want to cut it. It’s not a property of an abstract individual. It’s a property of a collective.

Dr. Terrence Deacon: I think we have real difficulty conceiving of causality in systems. We want to find the cause in a single thing. We want to reduce the cause to an invention in history, a famous man who makes something happen, a discovery, a single gene, or some biological accident. We have real difficulty when cause is distributed, when the cause is in many places at once and the consequence is the result of a convergence of these influences, no one of which is the cause.

Language, of course, is exactly that kind of situation. Language is a case in which it doesn’t make sense for an individual to have a language. A unique individual language is a non sequitur, a non-starter. It’s something that is systemic from the beginning. In the same sense, what we’ve learned about evolution and embryological development is that they are also systemic. They’re not instructed by single genes that say you must be this shape. They’re the result of the genes setting in motion competitions, growth processes, interactions, and communications, and out of those relationships emerge the structures that we see in embryos. Similarly, out of the relationships of ecosystems come the various niches and organism designs.

Learning:

David Boulton: This is precisely how I define learning. I like to look at learning as this process that we’re describing now rather than the singular acquisition process that it’s often defined as being. Like you’re saying, we are unfolding and differentiating and extending ourselves, all of which is another way of defining learning. So, we’re unfolding into differentiation in a way that’s creating a more differentiated, more complex social environment which then becomes the context that we’re unfolding in.

Dr. Terrence Deacon: Even when we learn individual words, we don’t, as a sort of caricature would suggest, hear the word, ask somebody what it means, and automatically know how to use it. We do sometimes ask people what things mean, but usually we learn words in context. We learn what words mean by progressively differentiating their meaning, by catching the nuance, the connotation, the way they fit with things. And we know when somebody doesn’t quite understand the meaning of a word, especially a technical word, because they oftentimes use it in a rote way that’s out of context and misses some crucial connections, because they haven’t, in fact, differentiated it.

David Boulton: Tell the story in your book about how, after all your years of experience in this field, you were stumped by an eight-year-old. I thought it was so beautiful in the book, and I want to include it because it touches on children and language.

A Child's Question:

Dr. Terrence Deacon: What prompted me to write the book, and prompted a lot of my thinking, was not the readings of experts in the field but, in fact, an encounter with an eight-year-old in one of my son’s classes. I went in as a dad, telling the classroom what dad does for a living to give kids an idea of different occupations and that sort of thing. I talked about my studies of the brain, my interest in language, and so on. In that process I said the standard things, such as that language is a unique form of communication that only humans use, that we must have a lot of changes in our brain that make it possible, that we’re specialized for this purpose, and that we are the only species that does this.

This prompted a young kid to say, “Well, but don’t dogs and cats have language? Dog language and cat language and bird language and so on?” And I said, “Well, metaphorically yes, they communicate, but their way of communicating is very different from ours.” I went on to explain those differences, but that didn’t seem to satisfy this questioner, who came back again and said, “Okay, I get it. So, they have simple languages and we have complicated languages.” I thought for a second and said, “No, it’s not like that. It’s not that they’re simple languages; they’re very different from language. There aren’t things like verbs and nouns, and so on and so forth. Language is really quite different, and the way it combines things is quite different. So it’s not really simple, it’s complicated.”

For some reason that was a troubling answer as well. The question that came back to me was, “Why? Why don’t animals have simple languages?” I managed to get myself out of that question by sort of confabulating the way an adult does, and said, “Well, of course we don’t understand all of those things and what a very complicated question” – then moving on.

Simple & Complicated Languages:

Dr. Terrence Deacon: But that question, why, really bothered me. It bothered me for a simple reason: all of the explanations that we had cooked up didn’t seem to answer that simple question. If language was just very complicated and you needed a really good brain with lots of learning devices to acquire it, then animals ought to have simple languages. But in fact, they have something very different from languages, a whole lot more like laughter and sobbing and smiles and frowns, which we also have. A simple language also wouldn’t require you to have a kind of crib sheet in your brain, a language device, a universal grammar that allowed you to say, ‘Oh, it’s one of those. I got that already.’ A simple language wouldn’t need all that support, because there would be little to worry about.

There wasn’t, in the standard explanations of intelligence, of special language devices, and so on, any story that answered the reverse question: not why we have language, but why there aren’t simple languages out there. Why don’t animals just slightly less intelligent than us have a slightly simpler language? And those less intelligent than them have a slightly simpler language than that? Or why aren’t there dozens of languages with different language devices, in different species, that are all like language but very, very different and not mutually translatable? We don’t find anything like that. That suggested to me that we were on the wrong track.

Emergence of Writing:

Dr. Terrence Deacon: You might ask about the minimal size needed to produce language, in the same way we would talk about the minimal conditions to produce writing in human societies. I think they are similar kinds of problems. As far as we can tell from the examples we’ve seen, writing emerges in complex societies, with dense populations and what amounts to city-like organization, in which large numbers of people have to be organized and controlled. Oftentimes trade seems to be involved in this.

David Boulton: Almost all of the earliest artifacts of writing we have are trade related.

Dr. Terrence Deacon: Yes, trade related. Although in Central America it may not be quite so simple as that. It may be more around religious communication and ritual organization. But it could well also be paying of something like taxes and tithes.

David Boulton: Right. Wampum and what have you.

Dr. Terrence Deacon: Right. What we see is not just a number of people but, in a sense, certain communicative demands that show up with numbers: a coordination problem of exchanging things and keeping things organized, and particularly of maintaining the differentiation of roles in that population. I think that when we go back to look at the origins of the first symbolic communication, one needs a social organization that’s already creating that need. By symbolic communication I mean something well before what we would recognize as language, but communicating things in the abstract, things that are not necessarily in the here and now: naming, discussing, and representing things that might be or were or could have been. In some sense the social organization must have been moving in that direction.

Sense of the Not Here, Not Now:

David Boulton: Was there an abstract sense of the not now before there was language to mediate it?

Dr. Terrence Deacon: I think there was not necessarily a sense of the not now. The question is do you invent words to represent something you’re thinking, or do the words help you think things that you couldn’t have thought before you had them?

David Boulton: If you look at the optimal path for children learning words, in my personal experience and observation, it’s at the very reaching edge of the differentiation or distinction being made that the word can now act as a label.

Dr. Terrence Deacon: Right, and I think the acquisition of language, as well as its early evolution, has this same dynamic. Again, it’s a kind of co-evolutionary dynamic in which the context is demanding it, in which the support is nearly there to produce it, in which the inside and the outside are both ready for a convergence on this result. The question about the origins of language really is: what unusual social context becomes the support for creating a kind of communication that is a non sequitur in evolution, that was not there before?

Of course, whenever we do this, we’ve moved into storytelling. We’ve moved into just-so stories, into what I call scenarios. I don’t think scenarios are science, but I also don’t think that you can do science without a little bit of storytelling, just to shoehorn your thinking in that direction, to create what Dennett calls ‘intuition pumps’, and I like that concept. So, I think that our storytelling is useful. I think it probably will never tell us what actually happened, because evolution doesn’t work like a story; it’s not a narrative, it’s a statistical process. Nevertheless…

David Boulton: It creates a space for us that we wouldn’t have opened otherwise. This is Einstein’s ‘imagination is more important than knowledge’.

Dr. Terrence Deacon: That’s right. Exactly. And so for me, what I try to look at in human evolution is what unique social context is there in our ancestors. Not what need they had to make tools, not what need they had to hunt, necessarily. These are things that other species can do, and other species do in combination with each other.

Critical Cooperation:

Dr. Terrence Deacon: For me, the issue begins to make sense when you think about a shift into using meat. Not necessarily hunting; this could be just scavenging for meat. What happens is that a number of serious problems arise. I think it’s the first really serious division-of-labor problem, because it’s not wise for females with young infants to be sitting out at a kill site on the open savannah. That’s not a place where you want to keep young infants who are making noise, fussing, and tending to run off and hide, because there are going to be other scavengers and predators out there at the same place.

There's going to need to be some kind of cooperation around that, which means that males are going to have to be in some sort of agreement with each other, because they're defending each other at this kill site in order to get the meat. It may be that they can hold the site just long enough to cut off a leg or something from this downed animal and run up a tree with it, where no other animals can chase you for the moment. But that sets up a whole series of reproductive problems, I think, that didn't occur before.

David Boulton: The core of that point is there is some realization that we’re better off cooperating.

Dr. Terrence Deacon: The cooperation is crucial. In other words, the story of language has to be about cooperation. I don't think it's driven by social competition, as we see in other species, though many people have suggested that. Of course, we use language a lot to do Machiavellian kinds of analyses, to trick each other and take advantage of each other. No question that's true. But is that the environment that would have generated the evolution of language, a means of communication that depends upon social agreement? It seems to me unlikely. That doesn't mean it can't be used for that. In fact, it seems almost certain that everything we have gets used for our competition.

So, the social problem that I see that derives from this is we’ve got this difficult issue of needing to cooperate while sexually we’re competing. Animals in groups are always in sexual competition with each other. Who gets to mate with whom is the crucial problem when you are in a social group. And the closer and the tighter that social group, the more individuals that have to be superimposed together, the more difficult negotiating that becomes.

David Boulton: Negotiating implies language evolving to differentiate cooperation from competition.

Negotiation and Learning Together:

Dr. Terrence Deacon: So, the problem of negotiation, especially in a group that has to cooperate, is that we have to find ways of representing the ‘what if’. It’s not that we’ve thought of the ‘what if’, necessarily. But there are alternatives that aren’t there now. What if I’m not here? Will this change the mating strategy? What if I’m off at the kill site and there are other males back here? Can I rely on females to avoid them? Can I rely on that male to respect the fact that I’m the dominant animal? I’m not there to defend my mating access. Will that change things? What can I rely on?

I think what we can rely on, and what we still rely on is what amounts to the gossip and the tattletale side of things. That is, we use other eyes who know that there are agreements, that we have expectations, and that we have come to some sort of agreement to keep things on the up and up. Keep things even, so to speak, so that we can both cooperate and compete in some sort of a balance that doesn’t tear the group apart.

I think that’s spontaneously generated by this context of moving into the meat niche. I think that has to be negotiated by finding ways of externally representing the ‘what if’. In a sense, it’s like a social contract story. It’s not about marriage, per se. It’s simply about negotiating this complicated cooperation-competition balance.

David Boulton: One level in all of this that seems obvious but not generally well understood is that what makes humans so powerful is our ability to learn together. Not only do we individually learn in ways that are off the scale of other creatures, but we collectively learn. We can learn to collaborate, we can learn to do things together. Our civilization, everything, the good, bad and the ugly of it all are connected to this.

Dr. Terrence Deacon: Yes.

David Boulton: Thank you so much, Dr. Deacon. It's been a great pleasure speaking with you.

Dr. Terrence Deacon: Thank you for having me.

Social Pressure Drives Language:

David Boulton: Whatever pre-spoken language forms human beings may have evolved with, they were a reflection of the collective, not the individual: of people being together in ways in which the complexity of their collective behaviors gave rise to a selective pressure toward this communication, toward language, in a way that other species didn't experience. Aren't there other species that get together in large groups and do complex things that could have promoted language in ways similar to humans?

Dr. Terrence Deacon: Well, of course there are many social insects that live in very large groups, and they do have complicated communication. It's typically one-off communication; that is, they have particular odors, perhaps particular sounds, that they produce to organize themselves. Then, of course, there are instinctual responses, triggered when they encounter a movement, a sound, or an odor from some other individual, that produce their behavior. We get very complex social organizations. One might say, 'Well, don't look at those creatures. They have very small brains.' Of course they're doing it differently. They're more like little tiny computers solving this problem.

We do have animal groups, mammal groups and bird groups, that can actually interact in fairly large numbers. For example, birds that have to nest in limited areas, large herd animals that work together, large schools of fish that seem to coordinate their activities, and flocks of birds that control their activities in numbers of a hundred. It's not unusual to find large groups of social species carefully integrated in their interaction. For me, the size of the social group is not necessarily a telling feature, although I think that there is a kind of minimal size of social group necessary to produce the kinds of pressures that generate language.

David Boulton: Necessary, but insufficient.

Dr. Terrence Deacon: That’s right.

David Boulton: So then we move to the question: beyond a certain minimally necessary but insufficient group size, what do you think were the cultural pressures that would have promoted language?